Data Insight = Quantitative Analysis = What a user does

In my previous post on user insight, I discussed the qualitative measurements that express the 'why' behind a user's decisions. Qualitative insight is always valuable, but it only captures a small percentage of your user base. As mentioned previously, there is ALWAYS a difference between what people say they do, what they do when observed, and what they actually do.

Quantitative Data 🔢

Quantitative data helps you identify opportunities and measure success by revealing what people actually do when they interact with a product.

Success means something different to everyone, especially to each company you work for. It's important to understand how your company keeps score, which is usually done through its success metrics. When you join a new company, the first thing you should find out is how it measures success and which metrics it uses to do so.

Metrics will be prioritized by the company's leadership team according to which data matters most to the business's revenue and growth. This usually means the most important metric for most businesses is user growth. However, I feel retention and revenue should be tracked alongside user growth: it's great to grow your user base, but you also need to retain those users and keep revenue growing.

Once you've established the company's success metrics, another important topic to discuss with your team is how they drive these metrics. You'll need to understand what changes have already been made and what needs to enter the pipeline to deliver the results they want to see.

A product team's success metrics should align with the company's goals. For example, if the company's goal is to retain users and you're working on winning new customers, you are marching in different directions. A common success metric for a start-up is brand awareness, so your product team's success metrics should include the number of times your app is downloaded or the number of visits to your website. Basically, any metric that shows people are aware of your product.

Don't forget to review the product team's success metrics regularly, because success will change as the product evolves. It may be beneficial to assign someone to review the metrics on a set cadence (maybe bi-weekly) to track changes. This will help you stay on top of any upward or downward trends during the product's lifecycle and pivot if needed.
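To make the bi-weekly review concrete, here is a minimal Python sketch that compares the two most recent readings of a metric and labels the movement. The `MetricSnapshot` class, the `classify_trend` helper, the 5% noise threshold and the sample numbers are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class MetricSnapshot:
    """One reading of a success metric taken at a review (e.g. every two weeks)."""
    period: str
    value: float


def percent_change(previous: float, current: float) -> float:
    """Percentage change from the previous review to the current one."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100


def classify_trend(snapshots: list[MetricSnapshot], threshold_pct: float = 5.0) -> str:
    """Label the latest movement as 'up', 'down' or 'flat'.

    threshold_pct is a hypothetical tolerance: changes smaller than this
    are treated as noise rather than a trend.
    """
    if len(snapshots) < 2:
        return "insufficient data"
    change = percent_change(snapshots[-2].value, snapshots[-1].value)
    if change >= threshold_pct:
        return "up"
    if change <= -threshold_pct:
        return "down"
    return "flat"


# Hypothetical bi-weekly readings of a metric such as weekly active users.
reviews = [
    MetricSnapshot("2024-W01", 1200),
    MetricSnapshot("2024-W03", 1150),
    MetricSnapshot("2024-W05", 1020),
]
print(classify_trend(reviews))  # -> down
```

The drop from 1,150 to 1,020 is about 11%, which is past the threshold. That downward trend should be raised at the next review.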

🚨Important Note: It's important that your success metrics align with both your work and the company's goals. Misalignment between your success metrics and the company's goals carries an opportunity cost.

Positively Influencing a Company's Success Metrics 🫶

Metrics should and will change; this reflects the product's growth. I would get buy-in early in the process rather than waiting until you see people chasing different goals to start a collaborative, cross-functional approach. It's important to get broad buy-in across all departments from the very beginning to make sure the success metrics capture the customer's needs and problems. A cross-functional, collaborative process for changing or deciding which metrics to track will help ensure product success.

Concepts and Frameworks

As a product manager, you will see a lot of different metrics, and not all of them are beneficial. It's important to understand when a metric will benefit the company and when it just looks good but adds no real value.

Vanity metrics are metrics that sound useful and look great but ultimately don't help measure product performance.

For example, if you are launching a new product, the first thing you want your users to do is engage with it, so you need them to perform the core task (maybe posting a photo). It's great if you received 1,000 downloads. Even though this shows awareness of the application, it doesn't mean users actually opened the application and used your product. Maybe only 5 of the 1,000 users who downloaded it actually engaged with your product. In this case, the download count is a vanity metric.
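To see why downloads alone can mislead, here is a minimal sketch that turns the example above into an activation rate. It assumes that "activated" means the user completed the core task. `activation_rate` is a hypothetical helper and is not part of any analytics tool.

```python
def activation_rate(downloads: int, activated_users: int) -> float:
    """Share of downloaders who completed the core task (e.g. posting a photo)."""
    if downloads <= 0:
        raise ValueError("downloads must be positive")
    return activated_users / downloads


downloads = 1_000  # vanity metric: shows awareness only
activated = 5      # users who actually performed the core task
rate = activation_rate(downloads, activated)
print(f"Downloads: {downloads}, activation rate: {rate:.1%}")
# -> Downloads: 1000, activation rate: 0.5%
```

Both numbers come from the same launch. The activation rate is the one that tells you whether to act.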

Actionable metrics are real data that you can use to make decisions. They give you actionable insight into how your product is doing, whether the news is good or bad, and what steps need to be taken.

Good metrics are actionable, usually time-boxed (weekly or monthly), and measured per customer, for example average revenue per user (ARPU).
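Here is a minimal sketch of a time-boxed, per-customer metric: ARPU calculated per month. The monthly figures are made up. The sketch assumes ARPU divides revenue by all active users in the period. Dividing by paying users only would give a different metric (ARPPU).

```python
# Hypothetical monthly figures for illustration only.
monthly = {
    "2024-01": {"revenue": 4_500.0, "active_users": 1_500},
    "2024-02": {"revenue": 5_200.0, "active_users": 1_600},
}


def arpu(revenue: float, active_users: int) -> float:
    """Average revenue per user: total revenue divided by all active users
    in the same time box (not only paying users, which would be ARPPU)."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return revenue / active_users


for month, m in monthly.items():
    print(month, round(arpu(m["revenue"], m["active_users"]), 2))
# -> 2024-01 3.0
# -> 2024-02 3.25
```

Revenue grew in February, but so did the user base. The per-user view shows the real improvement, which is about 8% rather than the roughly 16% jump in raw revenue.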

Pirate Metrics

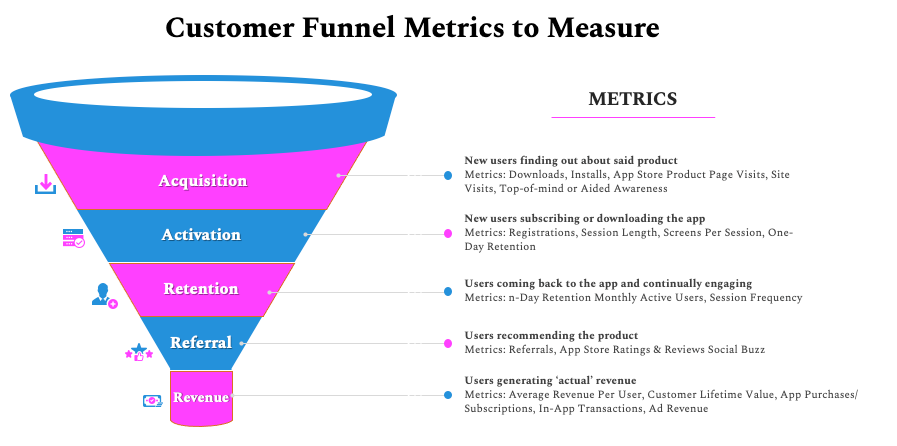

AARRR, or Pirate Metrics, is a framework by Dave McClure for tracking five user behavior metrics throughout the customer lifecycle: acquisition, activation, retention, referral, and revenue. These are referred to as funnel metrics. The idea is that a leaky funnel drips water. Users enter at the top, some "leak out" at each stage, and the customers who reach the bottom without leaking out are the ones who generate actual revenue.
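One way to find where the funnel leaks is to calculate the conversion from each stage to the next. The sketch below treats the five AARRR stages as a strict sequence for a single cohort. That is a simplification, because in practice referral and revenue don't always happen in that order. The counts are hypothetical.

```python
# Hypothetical user counts at each AARRR stage for one cohort.
funnel = [
    ("acquisition", 10_000),
    ("activation", 4_000),
    ("retention", 2_000),
    ("referral", 500),
    ("revenue", 250),
]


def stage_conversion(stages):
    """Return (stage, rate) pairs, where rate is the share of the previous
    stage's users who reached this stage; 1 - rate is the 'leak'."""
    results = []
    for (_, prev_count), (name, count) in zip(stages, stages[1:]):
        results.append((name, count / prev_count))
    return results


for name, rate in stage_conversion(funnel):
    print(f"{name:<12}{rate:.0%} of previous stage, {1 - rate:.0%} leaked")

overall = funnel[-1][1] / funnel[0][1]
print(f"top-to-bottom conversion: {overall:.1%}")  # -> 2.5%
```

The stage with the biggest leak is usually the first place to look for an opportunity. In these made-up numbers, that is the drop from retention to referral.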

🚨Important Note: These metrics are especially useful for understanding a user's customer journey. This is particularly true in the revenue phase, where you can track the lifetime value of a customer (LTV) and compare it to the cost of acquiring that customer (CAC). A common rule of thumb is that the LTV:CAC ratio should be at least 3:1.
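Here is a minimal sketch of the LTV:CAC check. It uses one common simplified LTV formula: monthly ARPU × gross margin ÷ monthly churn rate. It defines CAC as acquisition spend ÷ new customers. Real businesses often use more detailed models, and every input below is a hypothetical value.

```python
def customer_lifetime_value(arpu_monthly: float, gross_margin: float,
                            monthly_churn: float) -> float:
    """Simplified LTV: monthly margin per customer divided by monthly churn.

    1 / monthly_churn approximates the expected customer lifetime in months.
    """
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    return arpu_monthly * gross_margin / monthly_churn


def customer_acquisition_cost(acquisition_spend: float, new_customers: int) -> float:
    """CAC: total sales and marketing spend divided by customers acquired."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return acquisition_spend / new_customers


ltv = customer_lifetime_value(arpu_monthly=50.0, gross_margin=0.8, monthly_churn=0.05)
cac = customer_acquisition_cost(acquisition_spend=20_000.0, new_customers=100)
ratio = ltv / cac
print(f"LTV={ltv:.0f}, CAC={cac:.0f}, LTV:CAC={ratio:.1f}:1, meets 3:1? {ratio >= 3}")
# -> LTV=800, CAC=200, LTV:CAC=4.0:1, meets 3:1? True
```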

A/B Testing Metrics

A/B testing, also known as "split testing", is a randomized experiment that involves two variants (versions) of a product. The variants are shown to different users to determine which version has the greatest impact and best drives your success metrics.

Testing with two random samples of users lets you get feedback from a smaller number of users within a set time frame. If users are unhappy with this 'temporary' change, it doesn't hurt your success metrics. It's a way to dip your toe in the water before you make a 'permanent' change to the user experience. This experimentation process is similar to a beta launch or a soft launch.
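Here is a minimal sketch of hash-based bucketing, one way to split users into random samples while only a small share sees the change. The `assign_variant` function, the 10% exposure and the experiment name are hypothetical. Experimentation platforms implement assignment in their own ways.

```python
import hashlib
from typing import Optional


def assign_variant(user_id: str, experiment: str, exposure: float = 0.10) -> Optional[str]:
    """Deterministically assign a user to 'A' (control) or 'B' (variation).

    Only `exposure` (a hypothetical 10% by default) of users enter the
    experiment; everyone else keeps the current experience and gets None.
    Hashing user_id together with the experiment name keeps each user's
    assignment stable across sessions while spreading users evenly.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # pseudo-uniform value in [0, 1)
    if bucket >= exposure:
        return None
    # Split the exposed slice evenly between control and variation.
    return "A" if bucket < exposure / 2 else "B"


counts = {"A": 0, "B": 0, None: 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-redesign")] += 1
print(counts)  # roughly 500 A, 500 B, 9,000 unchanged
```

The sketch hashes the user ID instead of drawing a random number on every visit. That way a returning user always lands in the same variant and never flips between experiences mid-test.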

A/B testing compares two versions of a product. It measures users' responses to Variant A (control) against Variant B (variation) to determine which variant is more effective. For example, if you are redesigning your checkout cart, the redesigned cart is the variation (B) and the original checkout cart is the control (A).

"In A/B testing, A refers to ‘control’ or the original testing variable. Whereas B refers to ‘variation’ or a new version of the original testing variable."- VWO

A/B testing helps to confirm what users actually do, as opposed to what they say they do. It can be incredibly useful for validating the real impact your launches will have.
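One common way to check whether Variant B's impact is real rather than noise is a pooled two-proportion z-test on conversion rates. This is a minimal sketch with hypothetical checkout numbers. It relies on a normal approximation that needs reasonably large samples, and testing tools may use other methods (for example, Bayesian ones).

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of control (A) and variation (B).

    Returns (z, two-sided p-value) using the pooled normal approximation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: original cart (A) vs redesigned cart (B).
z, p = two_proportion_z_test(conv_a=200, n_a=5_000, conv_b=260, n_b=5_000)
print(f"z={z:.2f}, p={p:.3f}")  # -> z=2.86, p=0.004
```

A p-value below a threshold chosen before the test, often 0.05, suggests the lift from 4.0% to 5.2% is unlikely to be chance alone. It doesn't tell you whether the lift is large enough to matter for your success metrics.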

🚨Important Note: Even though A/B testing can be beneficial, you do not want to test everything. Used too often, it can confuse users and create frustration. Reserve A/B testing for changes to high-traffic areas or sensitive parts of the product, such as onboarding or monetization workflows that can impact product revenue.

Final Words 📖

The metrics and frameworks used for data insight help you validate hypotheses, create new opportunities, and ultimately understand how your users interact with your product in the real world. Aligning all of these is only possible through actual data.

Although it's extremely important to understand users intimately and be able to interact with them, data insight confirms whether what users say is what actually happens. As you define your success metrics and validate them through various channels, you'll be able to explore the data to find opportunities.

Next Steps 🚀

- Vanity vs Actionable Metrics

- AARRR Pirate Metrics Framework

- A/B Testing best practices